Since OpenAI’s chatbot took the world by storm in 2022, the generative artificial intelligence tsunami has grown bigger by the day. Now, the focus is shifting to agentic AI—systems designed to act autonomously. Yet, despite the hype, no universal definition of AI agents exists. Their description and even their components vary significantly, depending on the framework, industry, or expert defining them.

In my view, defining AI agents begins with dissecting their core components. These systems dynamically orchestrate one or more foundational models and tools, manage memory, and coordinate internal processes. They retain full control over how tasks are executed. And despite the popular obsession with chat interfaces, they’re fundamentally not chatbots on steroids with a few extra features.

I’d go even further. AI agents are a turning point toward interactive systems capable of autonomous comprehension, strategic planning, and independent action.

The critical distinction between human and AI capabilities lies not in what can be accomplished (humans are fully capable of these tasks) but in how the tasks are executed. AI agents operate at a speed and scale that humans simply cannot replicate.

In this article, we shall dive into the world of agentic AI and define what agents are and what they are not. We’ll then compare them with traditional automation and learn when agents should be used instead of programmatic workflows. Next, we shall investigate their components and how to use them. We shall end our journey by uncovering the benefits AI agents bring to companies across industries and verticals. That being said, let’s go 😎

What are AI agents?

Autonomous software entities

AI agents are advanced software systems designed to operate independently in dynamic environments. They’re not like conventional programs that follow predefined code paths. Instead, agents integrate perception, reasoning, and action to achieve goals without constant human oversight.

For example, an AI agent managing a smart home might:

- Analyze energy usage patterns.

- Adjust thermostat settings based on weather forecasts.

- Notify the user of anomalies.

And it will perform all these actions without manual input.
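To make the perceive, reason, act cycle concrete, here is a minimal Python sketch of the smart home example. Everything in it is a hypothetical illustration: the `Observation` fields, the thresholds, and the action names are assumptions, and the decision step is hard-coded only to keep the loop readable, whereas a real agent would typically delegate it to a learned model.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    indoor_temp_c: float
    forecast_temp_c: float
    energy_kwh_today: float
    energy_kwh_avg: float


def perceive(raw: dict) -> Observation:
    # Perception: turn raw sensor/API readings into a typed observation
    return Observation(**raw)


def decide(obs: Observation, target_c: float = 21.0) -> list:
    # Reasoning: choose actions from the observation (illustrative thresholds)
    actions = []
    if obs.forecast_temp_c < 5:
        actions.append(("set_thermostat", target_c + 1))  # pre-heat before a cold spell
    elif obs.forecast_temp_c > 28:
        actions.append(("set_thermostat", target_c - 1))  # pre-cool before a heat wave
    if obs.energy_kwh_today > 1.5 * obs.energy_kwh_avg:
        actions.append(("notify_user", "Energy use is unusually high today"))
    return actions


def act(actions: list) -> None:
    # Action: a real agent would call device APIs here; this sketch just logs
    for name, arg in actions:
        print(f"{name}: {arg}")


reading = {"indoor_temp_c": 20.5, "forecast_temp_c": 2.0,
           "energy_kwh_today": 30.0, "energy_kwh_avg": 15.0}
act(decide(perceive(reading)))
```

Running this loop on a schedule, for example every few minutes, is what turns it into the hands-off behavior described above.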

The concept of AI agents builds on decades of research in artificial intelligence, robotics, and cognitive science. Early precursors include expert systems and autonomous robots, such as:

- MYCIN – A 1970s backward-chaining expert system developed at Stanford to diagnose bacterial infections and recommend antibiotics

- Shakey the Robot – Built in the 1960s, it was the first general-purpose mobile robot able to reason about its own actions, combining perception, planning, and navigation.

Modern agents, however, leverage machine learning and cloud computing to handle far more complex, multi-step tasks.

Key characteristics

AI agents are defined by three foundational traits that set them apart from traditional automation tools.

First, they are goal-oriented. Every AI agent is programmed or trained to pursue and complete specific objectives. For instance, a financial trading agent might aim to maximize portfolio returns while minimizing risk. This goal-centric approach allows agents to prioritize tasks dynamically rather than blindly following a fixed script. Likewise, a logistics agent could decide to reroute resources during supply chain disruptions.

Second, they are flexible and adaptive. Agents continuously refine their strategies based on new data. Consider a customer support agent deployed by an e-commerce platform. It might initially struggle with complex returns involving international shipments. Over time, however, it learns to recognize regional customs regulations and suggest appropriate solutions. By learning from past interactions, it reduces escalations to human agents.

Third, they exhibit self-optimization capabilities. Through feedback loops, agents improve their performance autonomously. Consider a cybersecurity agent monitoring network traffic. By analyzing past intrusion attempts, it can refine its anomaly detection algorithms to reduce false positives.
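The feedback loop behind this kind of self-optimization can be sketched in a few lines. This is a toy built on assumptions: the anomaly score is presumed to come from some upstream model, and the fixed step size is an illustrative choice. Each analyst verdict nudges the alert threshold up after a false positive and down after a missed intrusion.

```python
class AdaptiveDetector:
    """Flags traffic whose anomaly score exceeds a threshold tuned from feedback."""

    def __init__(self, threshold: float = 0.5, step: float = 0.05):
        self.threshold = threshold
        self.step = step

    def is_anomalous(self, score: float) -> bool:
        return score > self.threshold

    def feedback(self, score: float, was_attack: bool) -> None:
        """Update the threshold once an analyst confirms what the event really was."""
        flagged = self.is_anomalous(score)
        if flagged and not was_attack:
            # False positive: become slightly less sensitive
            self.threshold = min(1.0, self.threshold + self.step)
        elif not flagged and was_attack:
            # Missed intrusion: become slightly more sensitive
            self.threshold = max(0.0, self.threshold - self.step)
        # Correct decisions leave the threshold unchanged


detector = AdaptiveDetector()
detector.feedback(0.6, was_attack=False)  # benign traffic was flagged
print(detector.threshold)
```

Real systems would usually retrain or recalibrate the underlying model rather than move a single threshold, but the principle of learning from labeled outcomes is the same.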

Agents vs. traditional automation

Traditional automation relies on workflows that follow rigid, step-by-step rules. Email autoresponders that trigger predefined replies are a good example.

The distinction between AI agents and rule-based workflows lies in their approach to problem-solving.

Traditional automation, such as robotic process automation (RPA), excels at repetitive tasks with predictable inputs. For example, an RPA script might extract data from invoices and populate accounting software fields. However, it fails if invoice formats change or unexpected errors occur. This is a common issue in industries like healthcare, where many claims are initially denied due to missing or mismatched data.

AI agents, conversely, handle unstructured scenarios by making context-aware decisions. They navigate ambiguity by combining multiple techniques. For example, a claims-processing agent could:

- Use optical character recognition (OCR) to read handwritten or scanned documents.

- Cross-reference patient records with insurance policies using natural language processing (NLP).

- Contact healthcare providers via email to resolve discrepancies.

- Reprocess corrected claims automatically.

According to industry case studies, this flexibility can drastically reduce processing times compared with manual workflows.

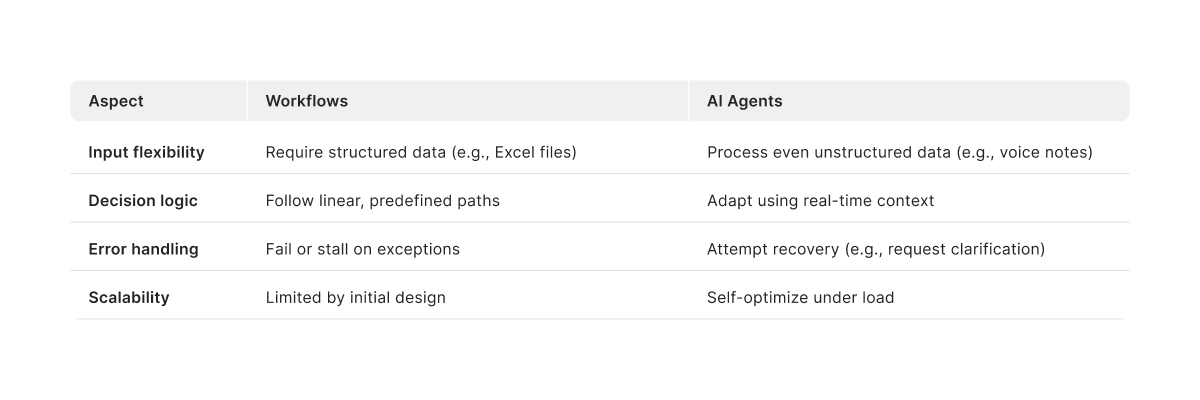

AI agents vs. workflows

While workflows and AI agents both automate tasks, they differ fundamentally in three areas:

- Logic – Workflows rely on fixed logic, while agents adapt to new scenarios.

- Inputs – Workflows require structured data, whereas agents can interpret unstructured data like voice commands or handwritten notes.

- Scalability – Workflows scale poorly because of their static design, while agents self-optimize to handle growing demands.

Case study

To better understand the differences, let’s look at a real-life example from insurance claims processing.

In the workflow approach, a legacy system would deny claims if any field in a submitted form is incomplete. Employees then manually contact customers to resolve issues, leading to long processing times.

In an agentic AI approach, an agent would perform multiple steps, such as:

- Use OCR to extract data from scanned documents.

- Identify missing fields via NLP.

- Automatically email customers with tailored requests (“Please resubmit page 2 of Form XYZ”).

- Reprocess claims upon receipt.

This reduces processing times to 3 days and cuts manual labor by 50 percent.
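The agentic claims flow above can be sketched as follows. The OCR and NLP steps are deliberately stubbed out: `extract_fields` assumes the scanned form has already been converted to `Key: value` text, and the required field names are hypothetical. The point is the control flow, in which the agent drafts a tailored request instead of rejecting the claim outright.

```python
from typing import List, Optional, Tuple

# Hypothetical set of fields a claim must contain
REQUIRED_FIELDS = ["policy_number", "patient_name", "date_of_service", "diagnosis_code"]


def extract_fields(document_text: str) -> dict:
    """Stand-in for OCR + NLP: parse 'Key: value' lines from recognized text."""
    fields = {}
    for line in document_text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip().lower().replace(" ", "_")] = value.strip()
    return fields


def find_missing(fields: dict) -> List[str]:
    return [name for name in REQUIRED_FIELDS if not fields.get(name)]


def draft_request(customer: str, missing: List[str]) -> str:
    items = ", ".join(name.replace("_", " ") for name in missing)
    return f"Dear {customer}, please resubmit your claim with the following: {items}."


def process_claim(document_text: str, customer: str) -> Tuple[str, Optional[str]]:
    fields = extract_fields(document_text)
    missing = find_missing(fields)
    if missing:
        # Instead of denying, the agent asks for exactly what is missing
        return "pending", draft_request(customer, missing)
    return "ready_for_adjudication", None
```

Calling `process_claim` again when the customer replies covers the final reprocessing step.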

Hybrid systems

Some organizations blend agents and workflows. For instance, a healthcare provider might use:

- Workflows – Standard patient intake forms

- Agents – Analyze unstructured doctor’s notes to suggest diagnostic tests.

Both workflows and agents serve distinct purposes. Agents excel in scenarios requiring dynamic decision-making. In such cases, a flexible AI system must design the workflow itself in real time, rather than adhering to rigid, predefined steps.

Building blocks of generative AI agents

How do AI agents work?

AI agents operate through a three-layer architecture loosely inspired by biological systems.

First, there are sensors, namely the perception layer. These components collect raw data from the environment. Modern agents use diverse input channels:

- Text – Chat interfaces, emails, or API feeds (e.g., CRM updates)

- Visual – Cameras or LiDAR for autonomous vehicles

- Audio – Voice assistants like Alexa processing spoken commands

- IoT – Temperature sensors in industrial equipment

Take for example agricultural agents analyzing crop health. They might ingest satellite imagery, soil moisture readings, and historical yield data.

A processor then analyzes this data using tools like large language models (LLMs) or reinforcement learning algorithms. This reasoning layer transforms raw data into actionable insights using techniques such as:

- Symbolic AI – Rule-based systems for structured tasks like tax calculations

- Machine learning – Neural networks identifying patterns in unstructured data (e.g. sentiment analysis in reviews)

- Hybrid models – Combining LLMs for creativity with mathematical optimization for logistics

Finally, actuators execute actions based on the analysis. This action layer can carry out various types of actions, such as:

- Digital actions – Sending emails, updating databases, or triggering alerts

- Physical actions – Robotic arms assembling products or drones delivering packages

- Hybrid actions – A voice assistant placing a grocery order via API while scheduling delivery via an autonomous vehicle

In comparison with basic LLM chatbots, agents require state management and tool execution. State management is the ability to retain the message and event history and to store long-term memories across the multiple LLM calls of an agentic loop. Tool execution is the ability to safely execute an action output by an LLM and return the results to it.
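Both capabilities fit into a small agentic loop. In this sketch, `fake_llm` is a scripted stand-in for a real model call, and the JSON reply format (`tool`/`args` or `answer`) is an assumption rather than any vendor’s schema. The `messages` list is the state carried across LLM calls, and tool errors are fed back to the model instead of crashing the loop.

```python
import json
from typing import Callable, Dict, List

# Hypothetical tool registry: tool name -> Python callable
TOOLS: Dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"14 degrees and rainy in {city}",
}


def fake_llm(messages: List[dict]) -> str:
    """Scripted stand-in for a model: request a tool once, then answer."""
    if not any(m["role"] == "tool" for m in messages):
        return json.dumps({"tool": "get_weather", "args": {"city": "Lisbon"}})
    return json.dumps({"answer": "Take a jacket: " + messages[-1]["content"]})


def run_agent(user_msg: str, llm: Callable[[List[dict]], str] = fake_llm,
              max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_msg}]  # state persisted across calls
    for _ in range(max_steps):
        reply = json.loads(llm(messages))
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        if "answer" in reply:
            return reply["answer"]
        tool = TOOLS.get(reply.get("tool"))
        if tool is None:
            result = f"error: unknown tool {reply.get('tool')!r}"
        else:
            try:
                result = tool(**reply.get("args", {}))
            except Exception as exc:  # report tool failures back to the model
                result = f"error: {exc}"
        messages.append({"role": "tool", "content": result})
    return "stopped: step limit reached"


print(run_agent("Do I need a jacket in Lisbon?"))
```

The `max_steps` cap is a simple safeguard against an agent that keeps calling tools without ever reaching an answer.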

The controller

The controller orchestrates the AI agent’s actions. Depending on the task’s complexity, an agent can rely on different decision-making frameworks, ranging from simple rules to foundational models.

Rule-based logic follows predefined if-then paths. It’s ideal for simple tasks and for compliance-heavy industries. For example, a banking agent might follow rules like:

IF a transaction exceeds $10,000 AND originates from a high-risk country → THEN flag for manual review.
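Such a rule translates directly into code. A minimal sketch, where the country codes are placeholders rather than a real risk list and “exceeds” is read as strictly greater than $10,000:

```python
# Placeholder country codes; a real deployment would load a maintained risk list
HIGH_RISK_COUNTRIES = {"XX", "YY"}


def needs_manual_review(amount_usd: float, origin_country: str,
                        threshold_usd: float = 10_000) -> bool:
    """IF amount exceeds the threshold AND origin is high-risk THEN flag for review."""
    return amount_usd > threshold_usd and origin_country in HIGH_RISK_COUNTRIES


print(needs_manual_review(12_500, "XX"))
```

Because the logic is explicit, every flag can be traced back to the exact rule that fired, which is why compliance-heavy industries favor this approach.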

Foundational models are at the core of AI agents and they are served through an inference engine.

Machine learning models help agents make predictions. For example, a loan approval agent trained on historical data can predict default risk with high accuracy, in some cases outperforming human underwriters.

Generative AI, powered by LLMs like GPT-4, enables dynamic responses for creative tasks such as marketing copy generation. A travel agency’s agent could draft personalized itineraries by synthesizing user preferences (adventure sports, vegan dining, etc.) with local event calendars.

Reinforcement learning (RL) allows agents to optimize decisions through trial and error. This can be seen in gaming bots that adapt to player behavior. At this time, real-world deployment in safety-critical environments (e.g., warehouse robots) remains limited. Most systems still use supervised learning and traditional control for predictability.

The centralized decision-making process can be further refined by providing examples that showcase the agent’s expected behavior, a technique known as few-shot prompting. These examples teach the agent how to think or react in specific conditions.
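A minimal sketch of how such examples are commonly packed into a prompt. The `Input:`/`Output:` layout and the ticket-triage examples are illustrative assumptions; the resulting string would then be sent to whatever model the agent uses.

```python
from typing import List, Tuple


def build_few_shot_prompt(instructions: str, examples: List[Tuple[str, str]],
                          query: str) -> str:
    parts = [instructions.strip(), ""]
    for user_input, ideal_output in examples:
        # Each worked example shows the model the expected format and judgment
        parts.append(f"Input: {user_input}\nOutput: {ideal_output}\n")
    parts.append(f"Input: {query}\nOutput:")  # the model completes this line
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify each support ticket as BILLING, SHIPPING, or OTHER.",
    [("I was charged twice this month.", "BILLING"),
     ("My parcel has been stuck in customs for a week.", "SHIPPING")],
    "Can I change the email on my account?",
)
print(prompt)
```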

Memory systems

AI agents are stateful. They maintain persistent state, such as:

- Their conversation history

- Their memories

- External data sources used for retrieval augmented generation (RAG)

Agents rely on two types of memory systems to enhance decision-making.

Short-term memory is also known as working memory. It handles context windows for immediate tasks, such as retaining chat history during a customer interaction.

Long-term memory consists of all information that sits outside working memory and can be retrieved whenever needed. For example, a medical diagnosis agent might retrieve similar patient cases from the past decade to guide a treatment plan.

AI agents utilize both types of memory at the same time. Take Netflix’s recommendation agent as an example. It combines your current viewing session (short-term memory) with your watch history (long-term memory) to suggest shows.
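The idea of blending both memories can be sketched with a toy ranking function. This is not how Netflix’s system works; the catalog, the genre-only signal, and the weight of 2 given to the current session are all hypothetical simplifications.

```python
from collections import Counter
from typing import Dict, List


def recommend(candidates: Dict[str, str], session: List[str], history: List[str],
              session_weight: float = 2.0, top_k: int = 2) -> List[str]:
    """Rank titles (title -> genre) using short-term and long-term memory."""
    short_term = Counter(session)  # genres in the current viewing session
    long_term = Counter(history)   # genres from the stored watch history

    def score(title: str) -> float:
        genre = candidates[title]
        # The current session counts more than older history
        return session_weight * short_term[genre] + long_term[genre]

    return sorted(candidates, key=score, reverse=True)[:top_k]


catalog = {"Dark Sky": "sci-fi", "Bake Off": "cooking", "Cold Case": "crime"}
print(recommend(catalog, session=["crime"], history=["sci-fi", "sci-fi", "crime"]))
```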

Tools

Agents use tools to expand their capabilities beyond their core programming. These tools are a set of predefined extensions, functions, and data stores that give the agent’s controller access to real-world data and services. By executing these specialized operations, agents can both retrieve external information and perform practical tasks that would be impossible through their foundational models alone. With tools, agents can perform a wider variety of tasks with more accuracy and reliability.

The model generates structured output that clearly defines which tool to use.

Extensions bridge the gap between APIs and agents in a standardized way. They enable agents to execute API calls regardless of the APIs’ underlying implementation. The key strength of extensions lies in their built-in examples, which teach the agent how and when to use each API endpoint.

Functions are self-contained modules of code that accomplish a specific task. Based on each function’s specification, a model can decide which function to use for a given task and which arguments it needs. What the model cannot do is make a live API call, and that is one of the main differences between functions and extensions. This matters from a security perspective because it prevents agents from making direct API calls. Furthermore, functions are executed on the client side, while extensions are executed on the agent side.
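The function-calling pattern can be sketched as follows: the model only emits a structured request, and the client decides whether and how to run it. The JSON shape (`name`, `arguments`) and the `get_flight_price` function are hypothetical, loosely modeled on common function-calling formats rather than on any specific vendor’s schema.

```python
import json


def get_flight_price(origin: str, destination: str) -> dict:
    # Hypothetical client-side function; a real one would query your own backend
    return {"origin": origin, "destination": destination, "price_usd": 420}


# Allowlist: the model can only request functions registered here
ALLOWED_FUNCTIONS = {"get_flight_price": get_flight_price}


def execute_function_call(model_output: str) -> dict:
    """Parse the model's structured output and execute it on the client side."""
    call = json.loads(model_output)
    func = ALLOWED_FUNCTIONS.get(call.get("name"))
    if func is None:
        raise ValueError(f"model requested an unknown function: {call.get('name')!r}")
    return func(**call.get("arguments", {}))


model_output = '{"name": "get_flight_price", "arguments": {"origin": "ZRH", "destination": "JFK"}}'
print(execute_function_call(model_output))
```

Because execution happens in the client’s own code, the client can log, validate, or refuse each call before anything touches a real system.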

Data stores give the foundational model access to more up-to-date and dynamic information, which keeps its responses relevant and grounded. Through data stores, developers can supply additional data to the agent in its original format. This eliminates the need for time-consuming data transformations as well as costly model retraining or fine-tuning.
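A minimal retrieval sketch shows how a data store grounds a response without touching the model’s weights. As a loudly stated simplification, word-count vectors stand in for the learned embeddings a real vector database would use, and the documents and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. Real data stores use learned dense vectors.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def grounded_prompt(query: str, documents: List[str]) -> str:
    # Retrieved passages are injected into the prompt; the model itself is unchanged
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = ["Refunds are issued within 14 days of a return.",
        "Our office is closed on public holidays.",
        "Shipping to Canada takes 5 to 7 business days."]
print(grounded_prompt("How long does shipping to Canada take?", docs))
```

Updating the answer only requires updating `docs`, which is exactly the retraining-free property described above.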

Agent frameworks

Agent frameworks orchestrate calls to foundational models, manage the agent’s state, determine the structure of the agent’s context window, and define cross-agent communication in multi-agent systems.

Tools used by the controller can often be reused across frameworks, which makes agents easier to port from one framework to another.

Types of AI agents

Rule-based agents

Rule-based agents operate within predefined workflows, making them ideal for repetitive tasks like assembly line quality checks. However, their rigidity limits their ability to handle scenarios outside programmed rules, such as unexpected machine failures.

Autonomous AI agents

Autonomous agents will excel in dynamic environments. In a not-so-far-away future, self-driving delivery robots will be able to reroute around roadblocks without human intervention. Their strength will lie in their ability to process real-time data and make independent decisions.

Collaborative AI agents

Collaborative agents work in multi-agent systems to solve complex problems. Warehouse robots sharing inventory data to optimize restocking schedules are a prime example. This distributed approach enhances scalability and efficiency in large-scale operations.

At this time, multi-agent coordination still poses many challenges, such as competition for resources and communication overhead.

How to build AI agents?

Agents achieve objectives through structured cognitive frameworks, leveraging iterative reasoning to analyze data and make context-driven decisions. By continuously refining their actions via feedback loops—mirroring human learning processes—these systems progressively enhance their performance.

To master agent development, let’s deconstruct the core components that enable such autonomous, adaptive behavior.

Perception layer

The perception layer interfaces with the physical and digital world through various data ingestion tools, such as:

- APIs – Pull real-time stock prices or weather data, access customer details with a CRM integration

- Web scrapers – Extract competitor pricing from e-commerce sites

- IoT sensors – Monitor factory equipment vibrations for predictive maintenance.

Through preprocessing, raw data is cleansed and normalized. For example, a manufacturing agent might convert sensor readings into a standardized JSON format for analysis.
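A minimal preprocessing step along those lines might look like this. The raw field names (`id`, `val`, `unit`, `ts`) and the output schema are hypothetical; the sketch only shows typical cleanup moves: trimming identifiers, casting strings to numbers, converting units, and turning Unix timestamps into ISO 8601 strings.

```python
import json
from datetime import datetime, timezone


def normalize_reading(raw: dict) -> str:
    """Clean one vendor-specific vibration reading into a standard JSON record."""
    value = float(raw["val"])  # some devices send numbers as strings
    if raw.get("unit") == "in/s":
        value *= 25.4  # normalize velocity to mm/s
    record = {
        "sensor_id": str(raw["id"]).strip().upper(),
        "metric": "vibration_velocity",
        "value_mm_s": round(value, 3),
        "timestamp": datetime.fromtimestamp(int(raw["ts"]), tz=timezone.utc).isoformat(),
    }
    return json.dumps(record)


print(normalize_reading({"id": " press-7 ", "val": "0.5", "unit": "in/s", "ts": 0}))
```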

Reasoning engine

The brain of the agent—the reasoning engine—decomposes tasks into subtasks and formulates strategies.

For example, when planning a corporate event, an agent might break the request into:

- Book venue – Evaluate options, check availability, negotiate rates.

- Send invitations – Extract employee emails, create the message based on the information obtained in the previous step, send the emails, track RSVPs.

- Arrange catering – Choose menus that account for dietary restrictions.

Tools like LLMs handle creative tasks, while mathematical solvers optimize logistics. The brain can use various reasoning techniques, such as:

- Logical inference – IF budget < $10k, THEN exclude premium venues.

- Constraint optimization – Maximize attendee convenience while minimizing costs.

- LLM-based planning – Generate creative themes, such as “AI-powered sustainability summit”.
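The first two techniques can be combined in a few lines. In this sketch the venue data, the $10k rule, and the assumption that an attendee’s travel minute is worth $0.50 are all illustrative; a real agent might hand the optimization step to a dedicated solver instead of a brute-force `min`.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Venue:
    name: str
    cost_usd: int
    capacity: int
    avg_travel_min: int  # average one-way travel time for attendees
    premium: bool


def choose_venue(venues: List[Venue], budget_usd: int, attendees: int,
                 usd_per_travel_min: float = 0.5) -> Optional[Venue]:
    # Logical inference: IF budget < $10k THEN exclude premium venues
    pool = [v for v in venues if not (budget_usd < 10_000 and v.premium)]
    # Hard constraints: within budget and large enough for everyone
    pool = [v for v in pool if v.cost_usd <= budget_usd and v.capacity >= attendees]
    if not pool:
        return None
    # Optimization: minimize rental cost plus attendees' travel time priced in dollars
    return min(pool, key=lambda v: v.cost_usd + usd_per_travel_min * attendees * v.avg_travel_min)


venues = [Venue("Grand Hall", 12000, 300, 20, True), Venue("Loft", 6000, 150, 40, False),
          Venue("Library", 4000, 120, 60, False), Venue("Rooftop", 7000, 200, 25, True)]
print(choose_venue(venues, budget_usd=9000, attendees=120).name)
```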

Orchestration layer

At the core of the agent’s cognitive architecture lies the orchestration layer. It is responsible for maintaining memory and the agent’s state, as well as for reasoning and planning. By leveraging prompt engineering and structured frameworks, this layer streamlines decision-making, enabling the agent to interact dynamically with its environment and optimize task execution.

Agents execute decisions through three primary pathways:

- API-driven actions (e.g., a travel booking agent reserving flights via third-party platforms)

- RPA workflows for legacy system integration

- Physical actuators (e.g., a smart infrastructure agent adjusting HVAC temperatures in real time)

Agents can offload tasks exceeding their capabilities. For instance, a legal assistant agent might draft contracts using LLMs but escalate ambiguous clauses to human experts for review. This hybrid approach combines AI efficiency with human expertise for precision.
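A minimal human-in-the-loop router for the legal assistant example. It assumes each drafted clause comes with a confidence score between 0 and 1; how that score is produced is left open, and model-reported confidence is often poorly calibrated, so the 0.8 threshold is purely illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DraftedClause:
    text: str
    confidence: float  # hypothetical score in [0, 1]


def route(clauses: List[DraftedClause],
          threshold: float = 0.8) -> Tuple[List[DraftedClause], List[DraftedClause]]:
    """Split drafts into auto-approved clauses and clauses escalated to a human expert."""
    auto, escalate = [], []
    for clause in clauses:
        (auto if clause.confidence >= threshold else escalate).append(clause)
    return auto, escalate


auto, escalated = route([DraftedClause("Payment is due within 30 days.", 0.95),
                         DraftedClause("Liability is capped at fees paid, except where...", 0.55)])
print(len(auto), len(escalated))
```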

The agent’s foundational setup includes:

- System prompt design (e.g., role definition, response constraints)

- Available tools (APIs, functions, data sources)

- Behavioral guardrails (safety/ethical rules limiting harmful actions)

Because today’s agents have limited autonomy, the architectural design also fixes the agentic system’s structural topology, that is, the predefined relationships between components like databases and APIs.
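The foundational setup can be captured in a small configuration object. This is a hypothetical structure, not any framework’s API: the system prompt, tool registry, blocked actions, and spending cap are illustrative, and `is_allowed` shows the kind of guardrail check an orchestrator might run before executing an action.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class AgentConfig:
    system_prompt: str  # role definition and response constraints
    tools: Dict[str, Callable[..., object]] = field(default_factory=dict)
    blocked_actions: Set[str] = field(default_factory=set)  # never allowed
    max_amount_usd: float = 100.0  # spending cap for any single action

    def is_allowed(self, action: str, amount_usd: float = 0.0) -> bool:
        """Guardrail check run before the orchestrator executes an action."""
        if action in self.blocked_actions or action not in self.tools:
            return False
        return amount_usd <= self.max_amount_usd


config = AgentConfig(
    system_prompt="You are a support agent for an online store. Be concise.",
    tools={"issue_refund": lambda amount: f"refunded {amount}",
           "delete_account": lambda user_id: f"deleted {user_id}"},
    blocked_actions={"delete_account"},
)
print(config.is_allowed("issue_refund", 50))
```

Keeping guardrails in code rather than only in the system prompt means they hold even if the model is persuaded to ignore its instructions.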

Memory infrastructure

Short-term memory is constrained by the model’s context window, which is limited by computational cost. Adding more information to the system prompt can enrich this working memory. However, larger prompts reduce the model’s ability to effectively process all the provided information, and scaling this approach increases computational costs.

By contrast, long-term memory uses databases to archive historical interactions and knowledge. Agents leverage both:

- Structured databases (e.g., SQL) for organized data retrieval

- Unstructured vector databases for flexible pattern recognition and experience-based learning

Episodic memory stores records of the agent’s past actions and interactions. Over time, older entries may lose relevance. Therefore, they necessitate filtering mechanisms to prevent outdated or trivial data from overwhelming the working memory.
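One common way to implement that filtering is to let each episode’s relevance decay over time. A minimal sketch, assuming each stored episode carries an age and an importance score; the 30-day half-life and the cut-off are illustrative values, not recommendations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Episode:
    text: str
    age_days: float
    importance: float  # 0..1, assigned when the episode is stored


def relevance(ep: Episode, half_life_days: float = 30.0) -> float:
    # Exponential decay: an episode's weight halves every `half_life_days`
    return ep.importance * 0.5 ** (ep.age_days / half_life_days)


def recall(episodes: List[Episode], k: int = 3, min_score: float = 0.1) -> List[Episode]:
    """Return up to k episodes worth loading into working memory."""
    ranked = sorted(episodes, key=relevance, reverse=True)
    return [ep for ep in ranked if relevance(ep) >= min_score][:k]


memory = [Episode("refund issued", 1, 0.9), Episode("greeting", 0, 0.05),
          Episode("address changed", 120, 0.8), Episode("escalated bug", 10, 0.6)]
print([ep.text for ep in recall(memory)])
```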

Semantic memory encodes the agent’s understanding of the world and its own identity. It relies on:

- Vector databases to map conceptual relationships (e.g., clustering customer complaints like “shipping delay” vs. “wrong item” for response efficiency)

- Knowledge graphs to link entities (e.g., a medical agent associating “fever” with “malaria” and its treatment “artemisinin”)

Procedural memory stores the agent’s codified knowledge of how to perform tasks, such as predefined routines or learned skills (e.g., formatting API calls).

When to use AI agents (and when not to)

Here’s a practical framework for deciding.

Use deterministic workflows when your process is rule-based, predictable, and rarely encounters edge cases. Coding fixed logic is simpler, faster, and more cost-effective in these scenarios.

Switch to AI agents when workflows fail unpredictably or require real-time adaptation. Agentic systems thrive in dynamic environments with shifting variables, ambiguous inputs, or evolving goals. They offer the flexibility that rigid code can’t match.

In short: predefined rules handle stability; agents solve complexity.

Ideal scenarios

Due to their flexibility and decision-making abilities, AI agents thrive in environments requiring complex decisions or adaptability.

Ideal use cases involve dynamic environments where real-time adjustments are critical. For example, autonomous delivery agents reroute around traffic accidents by analyzing live GPS data and traffic camera feeds, reducing urban delivery delays. Similarly, disaster response agents coordinate drones, chatbots, and supply chains during emergencies, accelerating rescue operations. Education agents also demonstrate value by personalizing lesson plans based on student performance data.

Poor use cases

Agents are overkill for simple tasks. Basic workflows handle repetitive actions such as file format conversions more efficiently. Agents also struggle in low-data environments. Diagnosing ultra-rare diseases with fewer than 100 known cases, for instance, leads to unreliable predictions due to insufficient training data.

Furthermore, AI agents are poorly suited for ethically sensitive tasks. High-risk domains like autonomous weapons systems pose unacceptable risks because agents lack human ethical judgment. Fully autonomous surgery is still not an option due to safety challenges that current technology cannot fully address. Facial recognition agents, meanwhile, already pose real privacy risks.

Risk mitigation

To mitigate risks, organizations should adopt human-in-the-loop (HITL) systems, where agents flag uncertain decisions for human review. Radiology AI tools, for example, highlight ambiguous X-rays for doctor evaluation, ensuring accuracy while maintaining efficiency.

Explainability tools like LIME (Local Interpretable Model-agnostic Explanations) clarify decision logic. For example, LIME can show that a loan application was denied mainly because of high credit utilization. Regular ethical audits further ensure fairness, such as retraining hiring agents if biases toward specific universities are detected.

Benefits of AI agent adoption

The adoption of AI agents delivers measurable improvements in efficiency, accuracy, and innovation.

Operational efficiency

Automating complex workflows leads to operational efficiency.

For example, manufacturing agents can optimize production schedules based on machine health data. According to industry research, such agents reduce downtime by 40 percent and boost output by 18 percent.

In finance, automating invoice processing saves companies $16 per invoice. For firms handling 10,000 invoices monthly, this means $160,000 in savings per month, or $1.92 million a year.

Error reduction

AI agents minimize human error in critical tasks. Pharmacy agents cross-referencing drug interactions prevent over 200,000 medication errors annually in the U.S. healthcare system. Similarly, fraud detection agents at PayPal greatly reduced false positives, saving $700 million yearly in investigative costs.

Such systems minimize risks that human oversight might miss, particularly in high-volume or high-stakes environments.

Innovation acceleration

By enabling rapid experimentation, AI agents also accelerate innovation. Insilico Medicine’s generative AI system designed novel candidate molecules against DDR1, a kinase target linked to fibrosis, in just 21 days, a step that traditionally takes years. Additionally, logistics agents optimize delivery routes using real-time weather and traffic data, cutting fuel costs while improving delivery times.

Challenges and ethical considerations

Security risks

AI agents introduce significant security risks due to their handling of sensitive data. In 2022, a healthcare AI provider suffered a breach that exposed 1.8 million patient records when hackers exploited unsecured API endpoints in a diagnostic agent.

To counter such threats, organizations must implement end-to-end encryption for data in transit and at rest, coupled with zero-trust architectures that require continuous authentication. Third-party security audits, such as SOC 2 Type II certifications, are increasingly adopted to validate compliance.

Attackers have also manipulated agents through disguised inputs (prompt injection), while model extraction attacks attempt to reverse-engineer proprietary models. Such emerging threats demand proactive defenses, like input sanitization and rate-limiting API access.
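A minimal sketch of the two defenses named above. Both are simplified assumptions: the regular expression catches only one obvious injection phrasing and is easy to evade, so real systems layer several controls, and the token bucket is a textbook rate limiter with an injectable clock so it can be tested deterministically.

```python
import re
import time

# Illustrative pattern only; real prompt-injection defenses need more than a regex
SUSPICIOUS = re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE)


def sanitize(user_input: str, max_len: int = 2000) -> str:
    text = user_input[:max_len]  # cap input size
    text = "".join(ch for ch in text if ch.isprintable() or ch in "\n\t")  # drop control chars
    if SUSPICIOUS.search(text):
        raise ValueError("input rejected: possible prompt injection")
    return text


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Rate limiting also makes model extraction more expensive, since such attacks typically require a large number of queries.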

Bias mitigation

Bias in AI agents remains a critical ethical challenge. A 2023 incident involving a Fortune 500 company revealed that its hiring agent systematically downgraded resumes due to biased training data. To address this, developers employ debiasing techniques like reweighting training datasets and adversarial training to force agents to ignore sensitive attributes.

Continuous monitoring with tools like IBM’s AI Fairness 360 helps detect disparities in real time.
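Reweighting can take several forms. One well-known variant, the reweighing method of Kamiran and Calders (also available as a preprocessing algorithm in AI Fairness 360), weights each training example so that group membership and outcome become statistically independent. A self-contained sketch on hypothetical toy data:

```python
from collections import Counter
from typing import Hashable, List


def reweigh(groups: List[Hashable], labels: List[int]) -> List[float]:
    """Weight each sample by P(group) * P(label) / P(group, label).

    Over-represented (group, label) pairs get weights below 1 and
    under-represented pairs get weights above 1, so that in the weighted data
    label and group are independent (provided every combination occurs).
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


groups = ["A", "A", "A", "B", "B"]  # e.g., hypothetical university tier
labels = [1, 1, 0, 1, 0]            # 1 = candidate advanced in the past
weights = reweigh(groups, labels)   # pass as sample weights when training
print([round(w, 2) for w in weights])
```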

Transparency

Transparency and explainability are essential for building trust and complying with regulations like the EU AI Act, which gives individuals a right to an explanation of certain decisions made with high-risk AI systems. Techniques such as SHAP values quantify how much specific factors contribute to an outcome, while counterfactual explanations show users how altering inputs could change the result.
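To illustrate a counterfactual explanation, consider a deliberately simple, hypothetical linear loan model whose weights are invented for the example. The function searches, in one-percentage-point steps, for the highest credit utilization at which the application would have been approved, which yields an explanation of the form “had your utilization been 56% instead of 80%, the loan would have been approved.”

```python
from typing import Optional


def loan_score(income_k: float, credit_utilization: float) -> float:
    # Hypothetical linear model: approve when the score is >= 0
    return 0.02 * income_k - 3.0 * credit_utilization + 0.5


def counterfactual_utilization(income_k: float, credit_utilization: float) -> Optional[float]:
    """Highest utilization (<= the current one) that would flip the decision to approve."""
    start_pct = round(credit_utilization * 100)
    for pct in range(start_pct, -1, -1):  # step down one percentage point at a time
        if loan_score(income_k, pct / 100) >= 0:
            return pct / 100
    return None  # lowering utilization alone cannot change the outcome


print(counterfactual_utilization(income_k=60, credit_utilization=0.8))
```

Real counterfactual methods search over several features at once and add plausibility constraints, but the output has the same user-facing shape.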

Regulatory frameworks like GDPR’s Article 22 grant users the right to contest automated decisions. Furthermore, proposed legislation such as the U.S. Algorithmic Accountability Act would require impact assessments for high-risk automated decision systems.

Accountability

Accountability and legal liability pose complex challenges when agents cause harm. In 2021, an autonomous delivery agent collided with a pedestrian. This sparked a lawsuit where liability was disputed between the developer, operator, and hardware vendor.

Clear contractual frameworks that define responsibility for software flaws and specialized AI liability insurance are emerging solutions.

Environmental impact

The environmental impact of AI agents cannot be overlooked. A widely cited 2019 study estimated that training a single large NLP model, including neural architecture search, emitted roughly 626,000 pounds of CO2, equivalent to the lifetime emissions of five cars. Mitigation strategies include using energy-efficient sparse models and transitioning to energy-optimized data centers.

Societally, the IMF estimates that almost 40 percent of jobs around the world are exposed to AI. Some countries have already taken action. Germany, for example, has established the AI & Workforce 2030 initiative to reskill workers for roles overseeing AI systems.

Ethical governance

Ethical governance frameworks, such as the OECD’s Principles on AI, emphasize human-centric design. Microsoft’s AETHER ethics board exemplifies this by evaluating projects for societal risks. Barcelona’s Decidim platform engages citizens in decisions about municipal AI deployments like traffic management systems.

Looking ahead, autonomous weapons systems highlight the urgency of international regulation. Similarly, agents capable of generating deepfakes necessitate tools like DeepMind’s SynthID to watermark synthetic media and prevent misuse.

The future of agentic AI

AI agents represent a paradigm shift in automation. They are already empowering organizations to address challenges once deemed too intricate or fluid for conventional software. Across industry sectors, agents are driving efficiency, reducing errors, and accelerating innovation.

However, AI agents aren’t magic boxes—they’re engineered systems requiring deliberate implementation. A phased, strategic rollout is essential:

- Launch pilot programs in bounded use cases to assess viability.

- Prioritize transparent AI tools to audit decision pathways.

- Embed human-in-the-loop safeguards for accountability and ethics.

As generative AI matures and edge computing decentralizes processing power, AI agents will become more capable. At some point, the line between human and machine decision-making will fade away. Thus, organizations adopting AI agents today will dominate tomorrow’s AI-driven economy. What are you waiting for?
